Can we finally detect AI-written content? Over the past few months, we’ve seen the launch of many tools that try to detect AI-written text.

But only yesterday did OpenAI, the company behind ChatGPT, launch its own text classifier, which aims to distinguish AI-written text from human-written text.

It’s a free tool with some limitations, but it can still help you detect whether something was written by AI.

I’ve tested this tool with both human-written text and AI-written text. Here are the results.

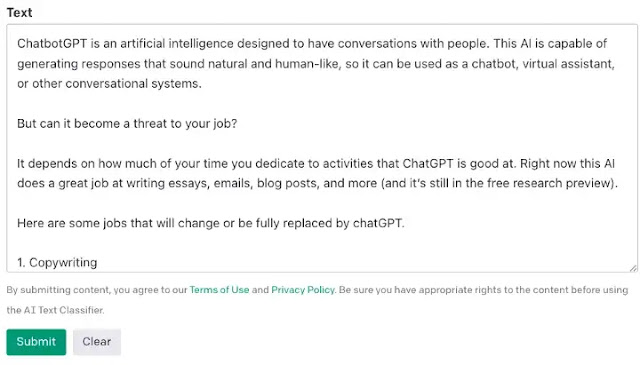

Testing the AI Detector With a Text Written by Me

Let’s start by testing OpenAI’s new AI detector with a human-written text. Before we try it out, we have to keep in mind the limitations of this tool:

- It requires a minimum of 1000 characters

- The classifier isn’t always accurate

- The classifier was primarily trained on English content written by adults
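The first limitation is easy to check before pasting anything into the tool. Here’s a minimal sketch, assuming the limit is counted as plain characters including spaces (the tool’s exact counting rule isn’t documented here, and the names `MIN_CHARS` and `is_long_enough` are made up for illustration):

```python
# Hypothetical pre-check for the 1000-character minimum mentioned above.
# Assumption: every character, including whitespace, counts toward the limit.
MIN_CHARS = 1000


def is_long_enough(text: str, min_chars: int = MIN_CHARS) -> bool:
    """Return True if the text meets the minimum length."""
    return len(text) >= min_chars


if __name__ == "__main__":
    sample = "This is a short paragraph. " * 10
    print(len(sample), is_long_enough(sample))
```

If the check fails, paste a longer excerpt rather than padding the text, since padding changes what the classifier sees.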

For this test, I’ll use a text I wrote myself. One reader said in the comment section that it was written by ChatGPT, so let’s find out whether the detector agrees.

Here’s the result.

Here are the five categories OpenAI uses, with their score ranges.

- Very unlikely to be AI-generated (<0.1)

- Unlikely to be AI-generated (between 0.1 and 0.45)

- Unclear if it is AI written (between 0.45 and 0.9)

- Possibly AI-generated (between 0.9 and 0.98)

- Likely AI-generated (>0.98)

Here, a score of 1 indicates high confidence that the text was generated by AI, and a score of 0 indicates high confidence that the text was written by a human.
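To make the ranges concrete, here’s a minimal sketch of how a score could be mapped to its category. This is not OpenAI’s code. The function name `label_for_score` is made up, the “Possibly AI-generated” range is taken as 0.9 to 0.98 (the range used for the productivity test in this article), and how exact boundary values such as 0.45 are treated is my own assumption:

```python
# Illustrative mapping from classifier score to category label.
# Assumption: each lower bound is inclusive, and exactly 0.98 still counts
# as "Possibly AI-generated", since only scores above 0.98 are "Likely".
def label_for_score(score: float) -> str:
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < 0.1:
        return "Very unlikely to be AI-generated"
    if score < 0.45:
        return "Unlikely to be AI-generated"
    if score < 0.9:
        return "Unclear if it is AI written"
    if score <= 0.98:
        return "Possibly AI-generated"
    return "Likely AI-generated"


if __name__ == "__main__":
    for s in (0.05, 0.3, 0.7, 0.95, 0.99):
        print(s, "->", label_for_score(s))
```

Notice how narrow the top category is: a text has to score above 0.98 before the tool calls it “Likely AI-generated.”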

So, according to this tool, the text was written by me.

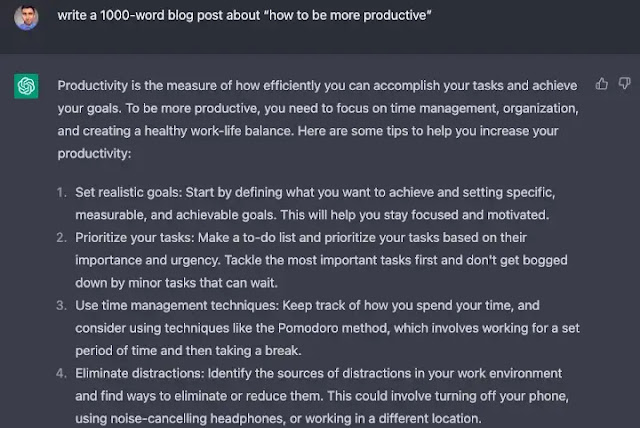

Testing the AI Detector With an AI-Written Text

Now let’s see what happens if we test the detector with a text generated by ChatGPT. I’ll start with a generic 1000-word article about productivity.

Here’s the result of the test.

The text fell into the “Possibly AI-generated” category (score between 0.9 and 0.98). This means that there’s a possibility that the text was generated by AI.

We know that this “possibility” is actually a reality. Still, not bad.

Let’s run a new test with a more complex text generated by ChatGPT.

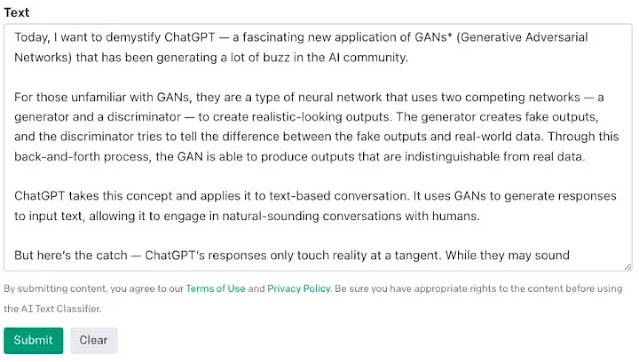

I’ll copy the first section of the article “Introducing ChatGPT!”

Pay attention to the prompt used to generate the text (it’ll help you understand the results later).

Write a witty blog post demystifying ChatGPT in the style of Cassie Kozyrkov. Explain why it’s useful, how it relates to GANs, and why its output only touches reality at a tangent.

Let’s run the test.

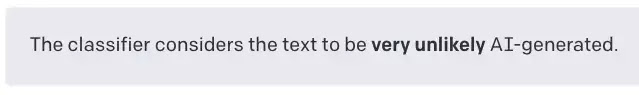

Here’s the result.

This time, the ChatGPT-generated text was labeled “Very unlikely to be AI-generated.” It seems that this tool isn’t perfect! OpenAI knows this and acknowledges that its classifier is not fully reliable.

But why did the detector flag the first text and miss the second? Weren’t both generated by ChatGPT?

I believe part of the answer is in the prompt. While my prompt for the productivity article was very straightforward, Cassie customized hers much more.

I’ve highlighted some keywords that might’ve helped ChatGPT generate text that fooled OpenAI’s detector.

Write a witty blog post demystifying ChatGPT in the style of Cassie Kozyrkov. Explain why it’s useful, how it relates to GANs, and why its output only touches reality at a tangent.

That’s only one way to fool an AI detector.

My goal isn’t to encourage you to fool AI detectors, so if you’re curious to know other ways, do your own research.

The Verdict

In this article, we’ve seen text entirely written by ChatGPT labeled as both “Possibly AI-generated” and “Very unlikely to be AI-generated,” so we’ve only confirmed what OpenAI itself says:

It’s impossible to reliably detect all AI-written text.

Let alone AI-written text edited by humans!

It’s no surprise that when OpenAI evaluated this tool, it correctly identified only 26% of AI-written text as “likely AI-written.”

It seems we’re still a long way from having a fully reliable AI text detector. For now, we can only hope OpenAI keeps improving its detection of AI-generated text.