Generating synthetic datasets with ChatGPT

DataKind, a global non-profit organization that harnesses the power of AI for the benefit of humanity, is currently developing the Data Observation Toolkit (DOT), which monitors data and uses dbt and Great Expectations to flag data integrity problems and scenarios that might need attention.

With checks for missing, duplicate, and inconsistent data, outliers, and domain-specific signals, DOT lends itself magnificently to the healthcare sector, an area that handles highly sensitive personal information.

Because that sensitivity makes it impractical to showcase DOT to organizations using live data, a synthetic dataset is needed: one with the same characteristics as real-world data but with entirely fabricated personally identifiable information (PII). Importantly, this dataset must contain some of the data integrity issues that DOT can identify.

There is a broad range of tools for generating synthetic data, and vendors such as Gretel.ai do a fantastic job of enabling the creation of high-quality synthetic data.

Most of these approaches aim to replicate the statistical nature of source data while ensuring differential privacy. However, for DOT we need data integrity signals which are difficult to reproduce with typical synthetic data modeling techniques.

Might we be able to use generative AI — such as ChatGPT — in this situation?

DOT’s analysis of an original dataset, containing information about patients’ appointments at medical facilities and their diagnoses, surfaced the following data integrity issues that should be recreated in the synthetic dataset:

- 8% of visits were duplicates, where a duplicate visit is defined as the same patient visiting the same health facility twice on the same day.

- In 0.1% of cases, the recorded temperature hinted at a miscalibrated instrument of the healthcare worker conducting the measurement, showing either very high or very low values compared to the mean temperature across all appointments.

- The patients’ age was not captured in the dataset in 3.2% of appointments.
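
To make these three definitions concrete, here is a minimal sketch in plain Python of how such rates could be computed. It is not how DOT implements its tests (DOT relies on dbt and Great Expectations). The field names (`patient_id`, `facility_id`, `date`, `temperature`, `age`) and the three-standard-deviation outlier threshold are assumptions made purely for illustration.

```python
from collections import Counter
from statistics import mean, stdev


def integrity_rates(rows, z_threshold=3.0):
    """Return the share of rows affected by each issue.

    `rows` is a list of dicts using a hypothetical schema:
    patient_id, facility_id, date, temperature, age.
    """
    n = len(rows)

    # Duplicate visit: same patient, same facility, same calendar day.
    # Only the surplus copies are counted here, not the first occurrence.
    visits = Counter((r["patient_id"], r["facility_id"], r["date"]) for r in rows)
    duplicates = sum(count - 1 for count in visits.values())

    # Missing age: the value was not captured at all.
    missing_age = sum(r["age"] is None for r in rows)

    # Temperature outlier: far from the mean across all appointments.
    temps = [r["temperature"] for r in rows]
    mu, sigma = mean(temps), stdev(temps)
    outliers = sum(abs(t - mu) > z_threshold * sigma for t in temps)

    return {
        "duplicate": duplicates / n,
        "missing_age": missing_age / n,
        "temp_outlier": outliers / n,
    }
```

Whether a duplicated pair counts as one failing row or two is a design choice, and it changes the reported percentage.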

First, let’s see if we can prompt ChatGPT to generate the data we need to test DOT …

Prompt:

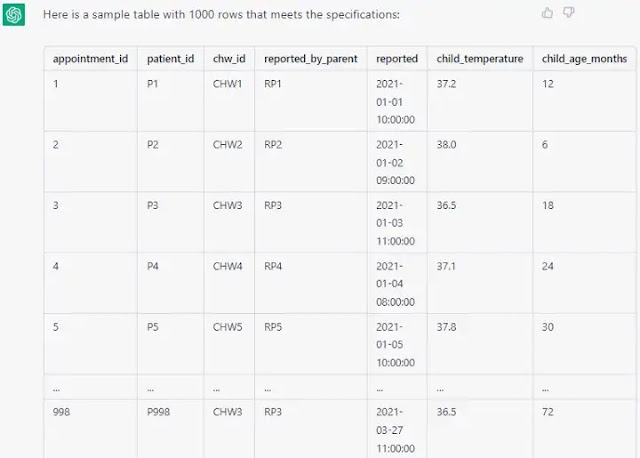

“Create a table with columns appointment_id (id), patient_id ( different id), chw_id (multiple recurring ids), reported_by_parent (different id), reported (timestamp), child_temperature (body temperature in celsius), child_age_months (integer with max value 72). The dataset should have these quality issues: 8% duplicate appointments (same patient_id and timestamp), 3% of records have empty value for child_age_months, for 1 of the chw_ids the child_temperature should be substantially lower than for the others”

Model response:

While the model did not print all of the promised 1000 rows due to input and output size constraints, it did explain the quality issues it had created in the data.

Although impressive, this trial has two obvious limitations:

- The number of rows seems to be limited to 10 since the system could not be coaxed into printing bigger tables. Hence the number of data integrity issues cannot be verified, although the “substantially lower temperature” for CHW3 looks promising.

- The approach is neither reproducible nor explainable, so it cannot, or at least should not, be used in situations where explainability is crucial.

OK, how about using ChatGPT to write the code that generates our test data instead?

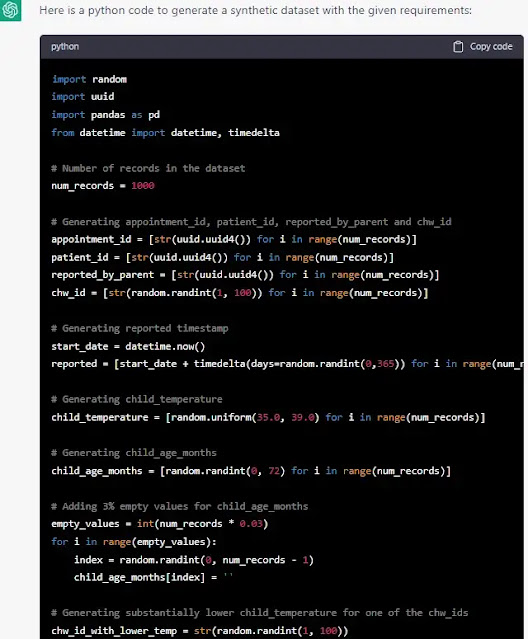

This is the part where some prompt engineering (aka a lot of trial and error 🙂) comes into play. With the following prompt, the model produced the expected code:

“Create python code that generates a synthetic dataset with columns appointment_id (id), patient_id ( different id), chw_id (multiple recurring ids), reported_by_parent (different id), reported (timestamp), child_temperature (body temperature in celsius), child_age_months (integer with max value 72). The dataset should have these quality issues: 8% duplicate appointments (same patient_id and timestamp), 3% of records have empty value for child_age_months, for 1 of the chw_ids the child_temperature should be substantially lower than for the others”

That looks quite promising! Pasted into an IDE, the code successfully produced a dataset that we can upload to DOT for analysis.
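
The code ChatGPT produced is not reproduced here, so the following is not the model's output. It is a minimal sketch, using only the Python standard library, of what a generator answering this prompt might look like. The function name `generate_appointments`, the ID formats, the temperature distributions, and the choice of the last CHW as the miscalibrated one are all assumptions.

```python
import random
from datetime import datetime, timedelta


def generate_appointments(n_rows=1000, dup_rate=0.08, missing_age_rate=0.03,
                          n_chws=5, seed=42):
    """Return a list of appointment dicts with injected data integrity issues."""
    rng = random.Random(seed)  # fixed seed makes the output reproducible
    chw_ids = [f"CHW{i}" for i in range(1, n_chws + 1)]
    miscalibrated_chw = chw_ids[-1]  # assumption: the last CHW reads too low
    start = datetime(2023, 1, 1)

    rows = []
    for i in range(n_rows):
        chw = rng.choice(chw_ids)
        # Assumed distributions: about 37.0 C normally, about 34.0 C when miscalibrated.
        mean_temp = 34.0 if chw == miscalibrated_chw else 37.0
        rows.append({
            "appointment_id": f"A{i:05d}",
            "patient_id": f"P{rng.randrange(10_000):05d}",
            "chw_id": chw,
            "reported_by_parent": f"R{rng.randrange(10_000):05d}",
            "reported": start + timedelta(minutes=rng.randrange(365 * 24 * 60)),
            "child_temperature": round(rng.gauss(mean_temp, 0.4), 1),
            "child_age_months": rng.randint(0, 72),
        })

    # Issue 1: duplicate appointments (same patient_id and timestamp),
    # appended as copies with a fresh appointment_id.
    for j, row in enumerate(rng.sample(rows, round(dup_rate * n_rows))):
        rows.append({**row, "appointment_id": f"D{j:05d}"})

    # Issue 2: empty child_age_months in a fixed share of the final rows.
    for row in rng.sample(rows, round(missing_age_rate * len(rows))):
        row["child_age_months"] = None

    rng.shuffle(rows)
    return rows


rows = generate_appointments()
print(len(rows), "rows generated")
```

Unlike a table printed directly by the model, a seeded script like this can be rerun, inspected, and scaled to any number of rows.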

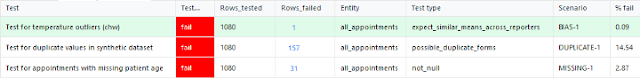

As a final step, let’s run our data integrity tests to see if the code created the expected data integrity issues.

As shown in the columns “Rows_failed” and “% fail”, the results for temperature outliers and missing values match the prompted values pretty closely. The third value, 14.54% duplicates, might seem way off at first glance, but it’s debatable whether two identical records should be counted as one or two duplicates (that’s where some more prompt engineering is needed…).
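
The effect of the counting convention can be checked with a little arithmetic. The sketch below assumes, hypothetically, that the generator appended 80 copies to 1000 unique appointments. It then computes the duplicate rate twice: once counting only the surplus copies, and once counting every row that belongs to a duplicated group. The helper name `duplicate_rates` is made up for this illustration.

```python
from collections import Counter


def duplicate_rates(keys):
    """Return (share of surplus copies, share of rows in any duplicated group)."""
    counts = Counter(keys)
    total = len(keys)
    surplus_copies = sum(c - 1 for c in counts.values())
    rows_in_dup_groups = sum(c for c in counts.values() if c > 1)
    return surplus_copies / total, rows_in_dup_groups / total


# Assumed setup: 1000 unique appointments plus 80 appended copies.
keys = list(range(1000)) + list(range(80))
surplus, involved = duplicate_rates(keys)
print(f"{surplus:.2%} {involved:.2%}")  # prints "7.41% 14.81%"
```

The second convention lands close to the 14.54% reported by the test, which suggests, but does not confirm, that the test counts both records of each duplicated pair.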

Conclusion and future work:

- ChatGPT can be used to generate synthetic data that also contains data integrity issues, which is useful for testing platforms and tools that monitor data quality.

- It can generate not only data but also Python functions for creating such data.

- This is a potentially very powerful capability, although the generated results have to be verified.

Building on this example, potential next steps include:

- Addressing the row limitation when creating tables directly.

- Using Microsoft’s implementation of OpenAI.

- Incorporating this approach into tools such as Faker.